The I/O Blender has reached an almost legendary status in virtualization circles. It is the term for what happens to storage I/O in a virtual infrastructure. The I/O Blender is created when potentially hundreds of virtual machines across dozens of virtual hosts all access the same storage volumes at the same time. It puts stress on every aspect of the storage infrastructure, but especially on the hard disk system, which has to thrash back and forth to respond to all these requests.

The default answer to the I/O Blender problem has been to add flash-based solid state disk (SSD) technology to your virtual infrastructure. But how do you determine if your virtual environment really needs SSD? And, if it does, where and how should you make that investment? SSD technology is viewed by many as a performance machete that eliminates many storage I/O performance problems. However, it is an expensive technology, and your budget may require that you take a more surgical approach, using the technology more like a performance scalpel.

If your budget requires a more precise approach, how do you gather the information to make the right decision? In the pre-virtualized data center, IT planners could often get by with standard operating system utilities like PerfMon to capture the data they needed to identify performance problems. But in the virtualized world, where almost every host component is shared with at least a dozen other virtual machines (VMs) and every storage component is shared across dozens of physical hosts, identifying performance bottlenecks is much more difficult.

What Do You Need To Track?

When deciding if an SSD made sense for a non-virtualized application, the information to capture followed a linear path: CPU utilization, network utilization and storage performance metrics like queue depth and latency. While this information could be captured manually, it would typically be measured at specific points in time, not as a historical average. In the virtual context, all this information needs to be captured and then correlated against the same information from all the other VMs across all the physical hosts in the environment.

As a result, IT planners trying to determine the need for an SSD investment in a virtual environment will need software tools to help capture and correlate that information. Manual spreadsheets are no longer adequate for conducting performance analysis.

CPU Utilization

The first step in any storage performance analysis is to determine if there truly is a storage performance issue. CPU utilization is generally a good indicator of this. If CPU utilization is high, then in most cases this is not a storage performance issue but either a lack of CPU resources or poor application programming.

In the virtual use case, this means capturing CPU utilization, both at the host and down to the VM level over a period of time. When a performance problem occurs, this performance history can be referenced to see if there was a spike in CPU utilization at the same moment the bottleneck took place. If the CPU utilization was under 50% during that moment, then more than likely there was a storage bottleneck causing the performance issue.
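The correlation described above can be sketched in a few lines of Python. This is only an illustration, not the method of any particular monitoring product: the function names, the `(timestamp, percent)` sample format and the five-minute window are assumptions made for the example, while the 50% threshold comes from the paragraph above.

```python
CPU_BUSY_THRESHOLD = 50.0  # percent, the rule of thumb quoted in the text


def peak_cpu_near(samples, incident_ts, window_s=300):
    """Return the highest CPU % recorded within window_s seconds of the incident.

    samples is a list of (timestamp_seconds, cpu_percent) tuples; this layout
    is an assumption made for the sketch. Returns None if no sample is close
    enough to the incident to say anything.
    """
    nearby = [pct for ts, pct in samples if abs(ts - incident_ts) <= window_s]
    return max(nearby) if nearby else None


def likely_storage_bound(host_samples, vm_samples, incident_ts, window_s=300):
    """True if neither host nor VM CPU spiked around the incident.

    A True result only suggests that storage is worth investigating next;
    it does not prove a storage bottleneck. Returns None when history is missing.
    """
    host_peak = peak_cpu_near(host_samples, incident_ts, window_s)
    vm_peak = peak_cpu_near(vm_samples, incident_ts, window_s)
    if host_peak is None or vm_peak is None:
        return None
    return host_peak < CPU_BUSY_THRESHOLD and vm_peak < CPU_BUSY_THRESHOLD
```

Using the peak inside the window rather than an average is a deliberate choice here, since the question being asked is whether CPU spiked at the moment of the slowdown.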

VMs CPU usage compared to the host they are running on

Storage Controller Utilization

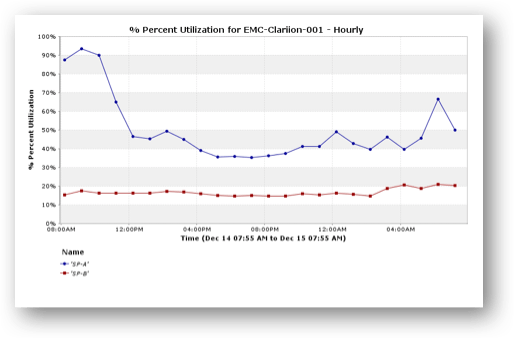

The storage controller is the compute engine for the storage system. The utilization of its CPU can be easily measured, but like host CPU utilization, this parameter needs to be captured over a period of time so it can be compared to the moment when an application performance issue occurred. If the CPU inside the controller is running at greater than 60% utilization, then adding faster media like SSD will likely not help improve performance.

Controller Utilization over time

If adding SSD to the current system won’t help, a decision has to be made to either upgrade the existing system or else add an additional storage system. Adding an additional system will require using something like Storage vMotion to migrate the performance-demanding VMs to that new system.

A cost-effective alternative to a storage system upgrade could also be adding server-based SSD to cache the performance-demanding VMs locally on the physical server. Most server-side caching software is read-only, so you'll need to determine if the performance-demanding VMs in question have a read-heavy I/O mix. If they do, a server-side read cache on server-side SSD can be a very cost-effective resolution to performance problems, especially if only a handful of VMs are experiencing the problems.
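As a rough sketch of the read/write check, the snippet below ranks VMs by the share of their I/O that is reads. The input format, the function names and the 70% cut-off are all assumptions chosen for illustration; the article does not state what counts as a "heavier read I/O mix", so treat the threshold as something to tune.

```python
def read_fraction(read_ops, write_ops):
    """Fraction of a VM's I/O operations that are reads (0.0 when idle)."""
    total = read_ops + write_ops
    return read_ops / total if total else 0.0


def read_cache_candidates(vm_io, min_read_fraction=0.7):
    """Return VM names whose I/O is read-heavy, most read-heavy first.

    vm_io maps a VM name to a (read_ops, write_ops) tuple collected over the
    observation period. min_read_fraction is an assumed threshold, not a
    figure taken from the article.
    """
    ranked = sorted(
        ((name, read_fraction(r, w)) for name, (r, w) in vm_io.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return [name for name, frac in ranked if frac >= min_read_fraction]
```

VMs that fall below the threshold are write-heavy enough that a read-only server-side cache is unlikely to help them much.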

If there are more than a handful of VMs that need the performance boost and if those VMs are scattered across several hosts, deploying an SSD appliance, as described above, specifically for these VMs may be a better approach.

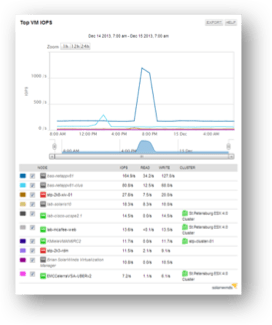

Deciding between a server-based SSD and a network-based SSD requires understanding performance demands on a per-VM basis. A tool that can provide a quick list of the top I/O consumers per storage system, regardless of the host each VM is on, is essential, as is the ability to determine the read/write mix of each VM.
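To make the idea of a "top I/O consumers" list concrete, here is a minimal sketch that groups per-VM measurements by storage system and ignores which host each VM runs on. The record layout (`vm`, `host`, `array`, `iops` keys) is hypothetical; a real tool would pull these values from the hypervisor and array APIs.

```python
from collections import defaultdict


def top_io_consumers(records, n=3):
    """Return the n busiest VMs per storage system.

    records is an iterable of dicts with the (assumed) keys 'vm', 'host',
    'array' and 'iops'. The host is deliberately not used for grouping, so a
    VM is ranked against every other VM on the same array regardless of where
    it runs. The result maps array name to a list of (vm, total_iops) tuples,
    busiest first.
    """
    per_array = defaultdict(lambda: defaultdict(float))
    for rec in records:
        per_array[rec["array"]][rec["vm"]] += rec["iops"]
    return {
        array: sorted(vms.items(), key=lambda kv: kv[1], reverse=True)[:n]
        for array, vms in per_array.items()
    }
```

If the top consumers for an array all sit on one or two hosts, server-side SSD is worth a look; if they are spread across many hosts, a shared, network-based option is the more natural fit.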

Storage Media Utilization

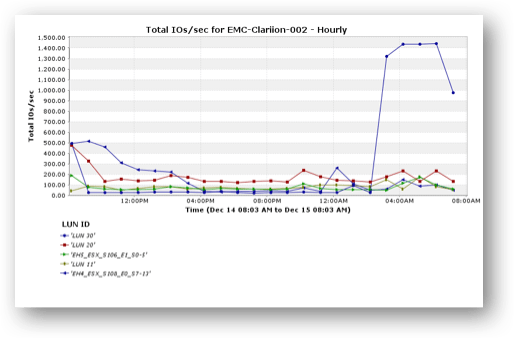

If the host CPU and the storage controller CPU are not being fully utilized, then the next step is to determine if there is a device I/O problem. This is done by examining the IOPS and bandwidth utilization of the storage system, typically on a per-LUN basis.

The first thing to look for is a few demanding VMs that are creating most of the I/O for a particular set of disks on the storage system. This requires a tool that can deliver an end-to-end view of the virtual environment, tracking a VM directly to the disks that it is using. With this tool, certain VMs can be moved to new LUNs to obtain a substantial improvement in response time. This capability can save a substantial amount of money versus going to SSD.

If the performance problem is more widespread than just a few VMs, or if those few VMs need more performance than a dedicated disk can provide, then the performance capability of the media itself needs to be examined. Poor performance is often evident for only a few minutes, a narrow window to capture with manual tools. Specifically, you want to find a period of time where the IOPS, the bandwidth or both essentially flatline. This means that the storage system has hit a performance wall, and, assuming storage controller CPU utilization did not spike, adding flash SSD to it will help performance. The flash can be added with confidence that it will justify the investment, since it has already been established that server and storage CPU resources are available to drive more data to a faster device.
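One simple way to look for such a plateau in historical data is sketched below. It flags runs of consecutive samples that stay close to the highest value seen. The 5% tolerance and five-sample minimum are assumptions for the example, and using the observed peak as a stand-in for the device's ceiling is a simplification: it will also flag a sustained workload that merely happens to be steady, so the result still needs to be read against controller CPU as described above.

```python
def find_flat_lines(samples, tolerance=0.05, min_run=5):
    """Find stretches where a metric sits at its observed ceiling.

    samples is a list of IOPS (or MB/s) readings taken at a fixed interval.
    A reading counts as 'at the ceiling' if it is within `tolerance` of the
    series peak. Returns (start_index, end_index) pairs, inclusive, for every
    run of at least min_run consecutive ceiling readings.
    """
    if not samples:
        return []
    peak = max(samples)
    if peak <= 0:
        return []  # an idle LUN has no ceiling to hit
    floor = peak * (1.0 - tolerance)

    runs = []
    start = None
    for i, value in enumerate(samples):
        if value >= floor:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - 1))
            start = None
    # Close a run that lasts until the end of the series.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - 1))
    return runs
```

A short spike to the peak is ignored because it never reaches `min_run` samples; only a sustained plateau is reported.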

Flat Lining LUN

The ability to incrementally add flash to the existing infrastructure, instead of resorting to purchasing a new system, is an important justification for improving the monitoring process. This is because adding flash to a storage system, assuming the system is flash capable, provides a performance boost to the entire environment. This process, assuming your vendor doesn’t overcharge for a flash upgrade, should be less expensive than integrating a new SSD appliance and less complex than developing a multi-tiered infrastructure that combines server-side SSD with shared storage.

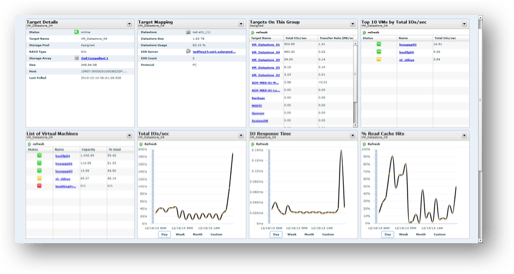

As mentioned, a performance bottleneck window may be as small as a few minutes or even seconds, which is why a solution that can provide active analysis is important. Performance monitoring in a virtual environment essentially requires TiVo-like functionality, where resource consumption can be “replayed” to find the point at which the bottleneck occurred. The figure below shows how this can be achieved by looking at current and historical usage on a single LUN, including which VMs are driving the load and what other LUNs are sharing the disks.

Historical LUN Usage By VM

Storage Network Utilization

Once the storage system performance attributes are determined and the host is ruled out as a performance culprit, a final area to explore is the storage network itself. This is important because it helps answer where SSD should be deployed: in the server or in the storage system. If the network is part of the performance problem, it may justify a network upgrade or the use of server-side flash as described above.

A storage network problem is likely if there is plenty of variation in the disk IOPS but both host and storage controller CPU utilization rates are low. Essentially, the host is waiting for responses to its requests and the storage system is waiting for requests from the hosts. The problem then lies in the interconnect: the storage network. This can be solved by either a network upgrade or server-side flash. As with the other upgrades, this does not mean that the entire network needs to be upgraded, but a higher performance path needs to be put in place for the more demanding VMs' data to travel.
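The heuristic in the paragraph above can be expressed as a small check. This is a sketch under stated assumptions rather than a diagnostic: "plenty of variation" is interpreted here as a coefficient of variation of at least 0.5, which is an arbitrary figure picked for the example, and the CPU limits reuse the 50% host and 60% controller rules of thumb from earlier in the article.

```python
from statistics import mean, pstdev


def suspect_storage_network(iops, host_cpu, controller_cpu,
                            min_cv=0.5, host_limit=50.0, controller_limit=60.0):
    """True if the pattern matches 'erratic IOPS, idle CPUs on both ends'.

    iops, host_cpu and controller_cpu are lists of readings covering the same
    period. min_cv (minimum coefficient of variation for the IOPS series) is
    an assumed threshold. A True result is only a hint that switch port and
    path statistics deserve a closer look.
    """
    if not iops or not host_cpu or not controller_cpu:
        return False
    average = mean(iops)
    if average == 0:
        return False
    variation = pstdev(iops) / average
    cpus_idle = max(host_cpu) < host_limit and max(controller_cpu) < controller_limit
    return variation >= min_cv and cpus_idle
```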

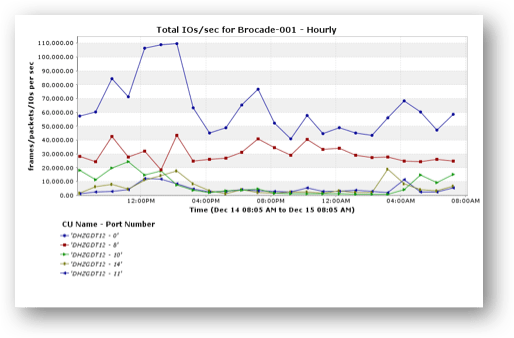

FC Switch port performance

Conclusion

Because IT is stretched so thin, the default answer to performance problems is to throw hardware at the problem. This is a choice also popular with vendors. In the modern data center, this means throwing flash at the problem, which is an expensive technology to toss around. Flash, because of its high performance and low latency, often fixes some of these problems. But with proper analysis this “fix” can be more targeted, providing better results and lower cash expenditures.

Sponsorship: This white paper was sponsored by SolarWinds. SolarWinds provides IT management software for IT professionals including storage and virtualization management products. You can find out more about SolarWinds products at http://www.solarwinds.com/


George, these are great insights into identifying virtualization performance bottlenecks, and an important step in helping storage managers determine the most effective solution.

Something that’s important to consider is that there isn’t just a single flash-based solution to improve performance in virtualized environments, but rather an array of possible solutions that can be deployed as hardware and/or software, in servers and/or storage arrays, and deliver varied degrees of improvement, with respective scaling costs.

This is something that we talk about extensively with our customers, as IT professionals are often unsure about how to assess the value of what would be the best implementation in their environment and within their budget.

To continue this discussion and shed some light on the various options, I put together a blog post: ‘Comparing SSD Options for Virtualized Environments: the Better-Best Approach’ – you can read it in its entirety on SanDisk’s Enterprise blog: http://itblog.sandisk.com/comparing-ssd-options-for-virtualized-environments-the-better-best-approach/